Thursday 30 October 2025

A. Introduction

Good evening and welcome. I begin by acknowledging the Traditional Owners of the land on which we gather this evening, the Wurundjeri People of the Kulin nation. I pay my respects to their Elders, past and present, and to First Nations persons here this evening. When we come together to exchange ideas, we do so on land with a rich history of knowledge sharing that spans millennia.

I extend my thanks to the Australian Academy of Law for organising this evening’s event. I acknowledge the President of the Academy, the Honourable Alan Robertson AM SC – thank you for the kind introduction. I also acknowledge the Academy’s Patron, the Honourable Chief Justice Gageler AC, its Fellows, Directors, Office Holders, Board Committee members and other distinguished guests. My thanks to everyone joining us this evening, whether here in Banco Court or virtually from other locations.

The Academy of Law unites different facets of the Australian legal community – practitioners, academics and the judiciary – to work together for the advancement of the discipline of law in various ways, including promoting excellence in legal scholarship, research, education, practice, and the administration of justice.1

In his famous polemic, ‘The Path of the Law’, written in 1897, Oliver Wendell Holmes Jr observed that: ‘For the rational study of the law the blackletter man may be the man of the present, but the man of the future is the man of statistics and the master of economics.’2 Although Wendell Holmes was concerned not with the prosaic statistical proof of facts but with more profound issues about the relationship between law and morals, and whether or not the law should be bounded by the past, his observation is a useful starting point for my talk tonight.3

The dominance and influence of generative AI is rapidly increasing, and we are collecting vast quantities of data about all aspects of our lives. Generative AI operates by calculating the probability of the next word (or token) given the preceding text. Each output is the most statistically likely having regard to an enormous field of information.

The utility of an AI system depends on the quality of its underlying data set and training. Similarly, the relevance and reliability of statistical evidence depend on the underlying data and the method used to calculate it. Wendell Holmes’ observation is increasingly pertinent. It seems fitting to reflect on the relationship between mathematics and the law, the proper application of statistics, and their limitations.

Judges, like undertakers and liquidators, usually arrive on the scene after something has gone terribly wrong. With some important qualifications to which I will come, judges are primarily concerned with ascertaining what happened; that is, they are generally concerned with past events – some relatively recent, and some many years, even decades, past. Depending on how postmodern your disposition, we generally accept that what happened in the past is fixed and it is the judge’s role to make findings as to what actually occurred. The difficulty arises where the evidence available to establish what occurred is, for any number of reasons including nefarious ones, limited, sketchy or contradictory.

In order to understand how judges go about this fundamental task, it is necessary to connect three essential aspects. The first is why fact-finding is important to the judge’s task. The second is the identity of the decision-maker and the final aspect is the process or methodology they adopt. Tonight, I am mainly concerned with the third, but one cannot get to it without mention of the first two.

The first aspect relates to the function of the judicial process, which is to fix liability or responsibility in an individual case in accordance with law. Fact-finding is a necessary precondition to the application of the law and the making of orders that determine legal liability. In other words, judicial fact-finding, and I include fact-finding by a jury, provides the basis on which legal liability is determined. Fact-finding carries with it significant consequences. It is to be contrasted with the theoretical and the hypothetical.

The second aspect is the identity of the decision-maker. Our system is one of individualised justice, in which each case is carefully considered on its merits. In Victoria, a person’s right to have a criminal charge or civil proceeding ‘decided by a competent, independent and impartial court or tribunal after a fair and public hearing’ is enshrined in the Charter of Human Rights and Responsibilities.4

Having noted those two important features, I turn to the process that is adopted by the judicial decision-maker in order to find the facts as a precondition for the making of orders.

B. Fact-finding & standards of proof: the language of proof

The law uses words that echo mathematical proof and, in particular, probability theory. ‘Probability’ is ‘a likelihood or chance of something’.5 The Royal Society describes ‘probability’ as ‘a conceptual device that helps us think and reason logically when faced with uncertainty about the occurrence of a questioned event in the past, present or the future.’6 In the context of statistics, probability is ‘the relative frequency of the occurrence of an event as measured by the ratio of the number of cases or alternatives favourable to the event to the total number of cases or alternatives.’7 That is, probability describes a relationship between one set of known or assumed facts and the existence or likelihood of an unknown set of facts.8

The closest echo of probability theory is found in the civil standard of proof. In civil cases, the tribunal of fact, typically a judge, must determine what took place ‘on the balance of probabilities’. For a fact to be established, it must be considered more probable than not, meaning a probability of more than 50 per cent.9 But this assessment is not a mathematical calculation.

It is accepted that the cogency of the evidence required to establish the civil standard will vary depending on the circumstances and seriousness of the allegation to be proved. In Briginshaw v Briginshaw, Justice Dixon observed that ‘the tribunal must feel an actual persuasion of its occurrence or existence before it can be found … But reasonable satisfaction is not a state of mind that is attained or established independently of the nature and consequence of the fact or facts to be proved.’10 In that seminal passage, Justice Dixon relates the process of fact-finding back to the first integer mentioned: the reason why the fact is being found and the consequences that may follow from reaching one state of satisfaction or another. The method of fact-finding is thus intrinsically bound up with the purpose for which it is undertaken.

There is another important aspect of fact-finding that must be noticed. Because it forms the basis on which liability is fixed, it involves the rendering of the uncertain to the certain. The reaching of a state of satisfaction in accordance with the standard of proof means that the fact, once found, is taken as true regardless of where it sits on the continuum from more probable than not to certain. Even though from a mathematical perspective, there likely remains a level of uncertainty when a decision is reached, from a legal perspective the finding of fact is certain, binding and final.

In Malec v JC Hutton Pty Ltd (‘Malec’), the High Court explained the position:11

A common law court determines on the balance of probabilities whether an event has occurred. If the probability of the event having occurred is greater than it not having occurred, the occurrence of the event is treated as certain; if the probability of it having occurred is less than it not having occurred, it is treated as not having occurred. Hence, in respect of events which have or have not occurred, damages are assessed on an all or nothing approach.

The criminal standard of proof – ‘beyond reasonable doubt’ – provides an important counterpoint to the language of probability. It is the highest standard of proof that exists in our legal system, but there is no objective formula which can be applied, nor a percentage of satisfaction beyond which a jury must convict an accused person.

In Green v The Queen, the High Court observed that:12

A reasonable doubt is a doubt which the particular jury entertain in the circumstances. Jurymen themselves set the standard of what is reasonable in the circumstances. It is that ability which is attributed to them which is one of the virtues of our mode of trial: to their task of deciding facts they bring to bear their experience and judgment.

But even in the context of the criminal law, the notion of probability is constantly employed. The rules of evidence, which govern both civil and criminal trials, are couched in terms of probability. The foundational rule of relevance provides that relevant evidence is admissible,13 and relevant evidence is evidence that, if it were accepted, could rationally affect (directly or indirectly) the assessment of the probability of the existence of a fact in issue in the proceeding.14

Many of the rules of exclusion are based on the court’s assessment of the probative value of the evidence. The ‘probative value’ of evidence is the extent to which the evidence could rationally affect the assessment of the probability of the existence of a fact in issue.15 For example, tendency evidence enables proof of a fact in issue by reference to an established tendency to act in a certain way or have a certain state of mind: thus the trier of fact reasons from satisfaction that a person has a tendency to have a particular state of mind, or to act in a particular way, to the likelihood that the person had the particular state of mind, or acted in the particular way, on the occasion in issue.

To similar effect, in considering whether a conviction for sexual offences was ‘unsafe’ the High Court has used the concept of ‘compounding improbabilities’ to assess whether overall, the evidence allowed the jury to reach a verdict of guilt beyond reasonable doubt.16

So far, I have considered past facts where the language and the judicial method involve assessments of probability and moving from the uncertain to the certain. Importantly, the language and the method shift when courts are required to make evaluative judgments of hypothetical events. This can be seen in two areas of fact-finding: causation and predictions as to the future. Both involve a court making findings about hypothetical events. In the case of causation, it is usually through the concept of the counterfactual: what would have happened had the wrongdoing not occurred? In many ways, the language and method are even closer to a statistical or population paradigm than those adopted in relation to past events.

For example, in a shareholder class action, the parties may rely on the evidence of an expert who can assess the loss caused by a misrepresentation to the stock market – what would have happened to a share price if the relevant information had been provided?

In a case where the plaintiff was injured due to the fault of the defendant, and claims damages for loss of earning capacity, the judge or jury will need to quantify this.

The parties will often rely on evidence from an expert as to the calculation of economic loss. This calculation will typically take into account a range of factors, assumptions and contingencies, including the plaintiff’s earning capacity prior to and following the injury, the plaintiff’s retirement age, how the plaintiff’s earning capacity would have increased over time, and a discount for adverse contingencies. An expert witness may calculate several different hypothetical scenarios.

The correct approach to assessing damages for past and future losses is found in the majority judgment of the High Court in Malec. The Court explained that the probability of a future or hypothetical event ‘may be very high — 99.9 per cent — or very low — 0.1 per cent. But unless the chance is so low as to be regarded as speculative — say less than 1 per cent — or so high as to be practically certain — say over 99 per cent — the court will take that chance into account in assessing the damages.’17
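The contrast between the all-or-nothing treatment of past events and the chance-based treatment of hypothetical events can be sketched in a few lines. This is a rough illustration only. The 1 per cent and 99 per cent thresholds are the Court’s own illustrative figures (‘say less than 1 per cent’), while the simple proportional discount, the function names and the loss figure are assumptions made for the purpose of the example; courts do not apply a formula of this kind.

```python
from fractions import Fraction


def past_event_award(loss, probability):
    """All-or-nothing: a past event found more probable than not is treated as certain."""
    return loss if probability > Fraction(1, 2) else 0


def hypothetical_event_award(loss, probability,
                             speculative=Fraction(1, 100),
                             practically_certain=Fraction(99, 100)):
    """ASSUMPTION: a simple proportional discount, used here for illustration only."""
    if probability < speculative:
        return 0              # chance too low to be taken into account
    if probability > practically_certain:
        return loss           # chance so high it is treated as certain
    return loss * probability  # otherwise the chance is reflected in the award


loss = 100_000  # hypothetical loss figure
for chance in (Fraction(40, 100), Fraction(60, 100)):
    print(chance, past_event_award(loss, chance), hypothetical_event_award(loss, chance))
```

On these assumed figures, a 40 per cent chance yields nothing on the all-or-nothing approach but 40,000 on the proportional one, while a 60 per cent chance yields the full 100,000 on the first approach and 60,000 on the second.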

In order to explore the relationship between judicial fact-finding and probability theory, and whether this language of probability reflects an underlying synergy between the law and mathematics, I wanted to give three examples to set the scene. They are different in time and place but as we will see, have some important common features.

C. The use of probability theory

Collins

The first one comes from California in the form of a 1968 case, People v Collins (‘Collins’).18

In Collins, an elderly woman was robbed by two assailants. She said that one of them was a young woman with blonde hair. Another witness had seen a Caucasian woman with dark blonde hair in a ponytail flee the scene and enter a yellow car driven by a black man with a moustache and beard. The defendants were arrested and at trial, a teacher of mathematics at a State College gave evidence about various probabilities. That evidence was based on ‘the “product rule,” which states that the probability of the joint occurrence of a number of mutually independent events is equal to the product of the individual probabilities that each of the events will occur.’19

I pause to give a simple example of the product rule. When one tosses a coin, the probability of a head or a tail is 50-50. When one tosses two coins, the probability of two heads is the product of the two probabilities – namely, 25 per cent or one in four.

Applying that simple mathematical approach, the prosecutor had the expert witness assume individual probabilities of six characteristics:

- Proportion of black men with a beard = 1 in 10

- Proportion of men with a moustache = 1 in 4

- Proportion of white women with a ponytail = 1 in 10

- Proportion of white women with blonde hair = 1 in 3

- Proportion of yellow motor vehicles = 1 in 10

- Proportion of cars that contain an interracial couple = 1 in 1,000

Applying the product rule to the assumed values – i.e. multiplying them together – produced a 1 in 12 million chance that a couple selected at random would have the relevant characteristics. The jury convicted.
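The arithmetic can be reproduced directly. The short sketch below applies the product rule first to the two-coin example and then to the six values the witness was asked to assume. The variable names are illustrative only, and the calculation says nothing about whether the assumed values or the assumption of independence were justified.

```python
from fractions import Fraction
from math import prod

# Two independent tosses of a fair coin: probability of two heads.
two_heads = Fraction(1, 2) * Fraction(1, 2)

# The six values assumed in Collins, as listed above.
assumed = {
    "black man with a beard": Fraction(1, 10),
    "man with a moustache": Fraction(1, 4),
    "white woman with a ponytail": Fraction(1, 10),
    "white woman with blonde hair": Fraction(1, 3),
    "yellow motor vehicle": Fraction(1, 10),
    "interracial couple in a car": Fraction(1, 1000),
}

# Product rule: multiply the individual probabilities together.
joint = prod(assumed.values())

print(two_heads)  # 1/4
print(joint)      # 1/12000000
```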

Clark

The second example is from the United Kingdom. In 1998, Sally Clark, a solicitor, was charged with the murder of her two infant sons, who had died within just over a two-year period.

The defence argued that the deaths were caused by Sudden Infant Death Syndrome (‘SIDs’). The prosecution relied on expert evidence from a paediatrician, Sir Roy Meadow, who took the frequency of a SIDs death in a family like the Clark family (1/8,543) and squared it to reach a probability of approximately 1 in 73 million for two such deaths.20 The witness compounded the mathematical evidence by describing the chance of the children having died naturally as ‘very, very long odds’ and saying that ‘it’s the chance of backing that long odds outsider at the Grand National’.21 Continuing the racing theme, he compared the odds to the chance of a punter successfully backing an 80 to 1 outsider in four consecutive years.22

In 1999, Sally Clark was convicted of both counts of murder.
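The squaring step in the expert evidence is easily checked. The sketch below simply reproduces the arithmetic that was presented to the jury; it assumes, as that evidence did, that the two deaths were independent events. The variable names are illustrative.

```python
from fractions import Fraction

# Frequency figure used in the evidence for a family like the Clark family.
single = Fraction(1, 8543)

# Squaring treats the two deaths as independent events (the assumption later criticised).
both = single ** 2

one_in = both.denominator
print(one_in)                      # 72982849
print(round(one_in / 1_000_000))   # 73, i.e. roughly 1 in 73 million
```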

Ward

The third example is from closer to home. In Ward v The Queen (‘Ward’),23 the accused was charged with, among other things, offences of armed robbery, attempted armed robbery and reckless conduct endangering life, which the prosecution alleged were committed over the course of a morning at two different locations in Melbourne.

The main issue in the trial was identification. The prosecution sought to place the accused near each of the crime scenes by showing that his mobile phone communicated with a phone tower near each location and at times proximate to the offences. To that end, they called a witness from a telecommunications company who said that a phone connected to the accused was used within a certain radius of two phone towers. That evidence was directed to explaining how a mobile phone communicates with cells located on a tower or, more specifically, a base station. He had produced a number of maps, which he described as ‘plots’, that represented the ‘calculated areas for probable and possible cell coverage’.24 Each plot, which was a road map, was entitled ‘Prediction of Probable (dark green) and Possible (light green) Cell Coverage area’ for a certain geographical area.25

Initially, the witness said that it was highly likely that the relevant phone was in the dark green zone of a tower near each offence. Asked by the prosecutor to be more precise, he said the probability that the phone was in the relevant green zone at that time was 95 per cent. The accused was convicted.

Analysis

In each of the three examples, an appeal court said that the evidence should not have been admitted. In the first two, the convictions were overturned and in the last case they were upheld notwithstanding the Court’s finding that the probability evidence was inadmissible.

So what was the problem? Was it that the maths was poor? Was it that the jury would have been unduly influenced by the numerical figures and not understood the maths? Or was there something more fundamental at play? Answering these questions is critical to our understanding of the role, if any, of probability theory in the judicial determination of the facts.

On appeal, in Collins, the Supreme Court of California identified two fundamental prejudicial errors relating to the mathematical theory of probability and the prosecution’s use of it at trial:26

(1) The testimony itself lacked an adequate foundation both in evidence and in statistical theory; and

(2) the testimony and the manner in which the prosecution used it distracted the jury from its proper and requisite function of weighing the evidence on the issue of guilt, encouraged the jurors to rely upon an engaging but logically irrelevant expert demonstration, foreclosed the possibility of an effective defense by an attorney apparently unschooled in mathematical refinements, and placed the jurors and defense counsel at a disadvantage in sifting relevant fact from inapplicable theory.

There was no evidence to support the individual probabilities.27 And even if they were correct, there was inadequate proof that the six factors were statistically independent.28 For example, there is an obvious overlap between men with beards and men with moustaches. Multiplying probabilities that are not independent produces an erroneous result.
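The effect of ignoring such an overlap can be shown with a small worked example. The 1 in 10 and 1 in 4 figures are those assumed at trial; the proposition that 9 in 10 bearded men also have a moustache is purely hypothetical and is chosen only to show the direction and size of the error.

```python
from fractions import Fraction

p_beard = Fraction(1, 10)      # value assumed at trial
p_moustache = Fraction(1, 4)   # value assumed at trial

# HYPOTHETICAL: suppose 9 in 10 bearded men also have a moustache.
p_moustache_given_beard = Fraction(9, 10)

# Product rule wrongly applied as if the two features were independent.
naive_joint = p_beard * p_moustache

# Correct joint probability when the features overlap.
actual_joint = p_beard * p_moustache_given_beard

print(naive_joint)                  # 1/40
print(actual_joint)                 # 9/100
print(actual_joint / naive_joint)   # 18/5, so the naive figure understates by a factor of 3.6
```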

Even if the 1 in 12 million chance was accurate, it was only the probability that a random couple would possess that combination of features – it was not, as the prosecutor had suggested, mathematical proof of guilt.29

Justice Sullivan observed that:30

While we discern no inherent incompatibility between the disciplines of law and mathematics and intend no general disapproval or disparagement of the latter as an auxiliary in the fact-finding processes of the former, we cannot uphold the technique employed … the testimony as to mathematical probability infected the case with fatal error and distorted the jury’s traditional role of determining guilt or innocence according to long-settled rules. Mathematics, a veritable sorcerer in our computerized society, while assisting the trier of fact in the search for truth, must not cast a spell over him. We conclude that on the record before us defendant should not have had his guilt determined by the odds and that he is entitled to a new trial.

Returning then to the case of Clark. In 2001, a couple of years after her conviction, the Royal Statistical Society (the ‘RSS’) issued a statement that the 1 in 73 million figure on which the prosecutor had relied had ‘no statistical basis’ because it was based on the assumption that two SIDs deaths in one family could be considered independent events when there were ‘very strong’ reasons to suggest that was false.31 The RSS also noted that ‘figures such as the 1 in 73 million are very easily misinterpreted.’32 Some media reports had stated this figure was the chance the deaths were accidental, an error in logic referred to as the ‘Prosecutor’s Fallacy’.33 In fact, the relevant considerations were the relative likelihoods of two deaths caused by SIDs or two deaths caused by murder.34

The Royal Society describes the ‘Prosecutor’s Fallacy’ as occurring:35

… when the probability of the evidence (matching DNA profile, glass fragment of the same refractive index as the fragment recovered from the target window, etc) given innocence (the random match probability) is incorrectly interpreted as the probability of innocence given the evidence. This is formally known as ‘transposing the conditional’, and is a clear breach of logic …
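The difference between the two conditional probabilities can be made concrete with a hypothetical example. The sketch below assumes a random match probability of 1 in 1 million, a pool of 5 million other people who could have left the sample, a true source who is certain to match, and no other evidence pointing to anyone in particular. All of those figures and the function name are assumptions made for illustration.

```python
from fractions import Fraction


def probability_source_given_match(random_match_probability, other_possible_sources):
    """Probability that a matching person is the true source.

    ASSUMPTIONS: the true source always matches, and before the match
    every person in the pool is equally likely to be the source.
    """
    expected_innocent_matches = random_match_probability * other_possible_sources
    return 1 / (1 + expected_innocent_matches)


rmp = Fraction(1, 1_000_000)   # hypothetical P(match | innocent)
others = 5_000_000             # hypothetical number of other possible sources

p_source = probability_source_given_match(rmp, others)
p_innocent = 1 - p_source      # P(innocent | match)

print(rmp)         # 1/1000000
print(p_innocent)  # 5/6
```

On these assumed figures the probability of a match given innocence is one in a million, yet the probability of innocence given the match is five in six. Transposing the conditional treats the first figure as if it were the second.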

Clark appealed her convictions but her appeal was dismissed in 2000.36

In 2002, the President of the RSS wrote to the Lord Chancellor, expressing concern about aspects of the use of statistical evidence in trials, with reference to the case of Clark.37

In 2003, the Court of Appeal set aside the convictions.38

In the final case in the trilogy, Ward, the Victorian Court of Appeal lamented the fact that the provisions in the Evidence Act 2008 (Vic) regulating the admissibility of opinion evidence had largely been ignored at trial and the approaches taken to adducing and challenging the admissibility of the evidence were ‘riddled with unexplored assumptions’.39 The Court concluded that the witness ought not have been permitted to express the likelihood that a mobile phone was in a particular geographic area at a particular time in percentage terms.40 That conclusion was largely based on the fact that the prosecution had not established that the witness had the relevant expertise to express the opinion. It is also apparent that there had been no adequate explanation in the evidence as to how the figure had been derived, with the witness giving different accounts as to whether it was the output of an (undisclosed) algorithm, or in fact an output based on experience.

DNA evidence

Of course, statistical and scientific evidence is often used in court. Much depends on the fact to be proved and the acceptability of the method deployed.

Thus, unlike the three cases just mentioned, DNA evidence is an example of statistical evidence that is powerful, accepted and regularly employed, having first been used in Australian criminal proceedings in 1989.41 It follows on from earlier forms of scientific evidence, such as fingerprint and handwriting evidence, in which experts opine on the likelihood or probability that the evidential sample identifies the accused.

Like other statistical evidence, DNA evidence is presented by experts. Expert evidence is of course an exception to the rule that evidence of an opinion is not admissible to prove the existence of a fact about the existence of which the opinion was expressed. In order for expert evidence to be admissible, the witness must have specialised knowledge based on their training, study or experience, and the opinion to be admitted must be wholly or substantially based on that knowledge.43

The debate

The case of Collins prompted debate about the proper role of mathematics in the fact-finding process. In 1970, Finkelstein and Fairley accepted that simple product theory was inadequate but proposed that in certain circumstances, where statistical evidence alone cannot identify a perpetrator, and where there is other incriminating evidence, a criminal jury can use Bayes’ theorem to identify a perpetrator.44

Bayes’ theorem ‘essentially provides a formal mechanism for learning from experience’.45 The Royal Society states that:

Bayes’ theorem provides a general rule for updating probabilities about a proposition in the light of new evidence. It says that:

the posterior (final) odds for a proposition = the [Likelihood Ratio] x the prior (initial) odds for the proposition46

A ‘Likelihood Ratio’ compares the relative support a piece of evidence provides for two competing hypotheses – A and B. It is the probability of the evidence if hypothesis A is true, divided by the probability of the evidence if hypothesis B is true.47 Essentially, Bayes’ theorem is a mathematical theorem that allows the initial probability of an event occurring or not occurring to be recalculated by means of a ‘Likelihood Ratio’ once further information is known.
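The updating rule described above can be sketched as follows. The function names and every number in the example are hypothetical; they are chosen only to show how prior odds, a Likelihood Ratio and posterior odds relate to one another, not to suggest how any court would or should use them.

```python
from fractions import Fraction


def likelihood_ratio(p_evidence_given_a, p_evidence_given_b):
    """Probability of the evidence under hypothesis A divided by that under hypothesis B."""
    return Fraction(p_evidence_given_a) / Fraction(p_evidence_given_b)


def posterior_odds(prior_odds, lr):
    """Bayes' theorem in odds form: posterior odds = Likelihood Ratio x prior odds."""
    return lr * prior_odds


def odds_to_probability(odds):
    """Convert odds in favour of a proposition into a probability."""
    return odds / (1 + odds)


# HYPOTHETICAL figures: prior odds of 1 to 1,000 in favour of hypothesis A,
# and evidence that is 100 times more probable under A than under B.
prior = Fraction(1, 1000)
lr = likelihood_ratio(Fraction(9, 10), Fraction(9, 1000))
posterior = posterior_odds(prior, lr)

print(lr)                              # 100
print(posterior)                       # 1/10
print(odds_to_probability(posterior))  # 1/11
```

Even evidence with a Likelihood Ratio of 100 leaves the proposition at only about 9 per cent on these assumed figures, because the prior odds were so low. The result depends as much on the starting point as on the evidence.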

In a Harvard Law Review article in 1971, Laurence Tribe, then an Assistant Professor at Harvard Law School and later one of its most eminent constitutional scholars, critiqued Finkelstein and Fairley’s proposal and outlined the costs of attempting to integrate mathematics into the fact-finding process of a trial.48 His criticisms were wide ranging: the risk that it would distract the jury, give excessive weight to factors that can most easily be quantified, shift focus away from elements such as volition, knowledge and intent towards elements such as identity and occurrence, undermine the independent judgment of the jury, threaten the presumption of innocence and dehumanise justice.49

Referring to the description in Collins that mathematics could be ‘a veritable sorcerer’, Professor Tribe observed that the ‘very mystery’ that surrounds mathematics makes it both impenetrable and impressive to the lay person.50 In his view, there was a confusion in using statistics and mathematical theorems to determine what happened on a unique occasion as opposed to the more appropriate application where what is being examined is the statistical features of a population of people or events.51

Professor Tribe identified three traditional objections to the use of such statistical evidence.52 The first was the notion that probability theory is useful for predicting future events but not for identifying what happened in the past.53 This was easily discounted. The probability that a toss of a coin produces a head or tail is the same whether that question is asked before the toss occurs or after it happens but remains concealed.

The second is more complex and it relates to the difference between mathematical evidence about the generality of cases of the kind with which the court is concerned and evidence of the particular case at hand.54 Professor Tribe thought it was possible to transform probability as a measure of objective frequency to probability as a measure of subjective belief in a particular case, drawing on Professor Savage’s subjective theory of probability.55

The third relates to the fact that mathematical evidence taken alone is unlikely to be determinative and adopting it risks distorting the trial process in a number of respects.56 Professor Tribe approached this part of his thesis by reference to the classic illustration of the fictional town where all buses belong to one of two bus companies – 80 per cent of buses are from the Blue Bus Company and the Red Bus Company has the remaining 20 per cent. If a bus negligently knocks over someone in the dark of night, the Blue Bus Company is not held liable simply because there was an 80 per cent chance that the bus was blue. Our legal system does not allocate liability based on probability either – if it did, the Blue Bus Company could be held liable for 80 per cent of the damage and the Red Bus Company for the other 20 per cent. One reason identified by Professor Tribe for not adopting that approach is that it would eliminate the need for plaintiffs to do more than establish background statistics and provide no reason to exclude the possibility that in fact the Blue Bus company was innocent.57

In answer to the use of Bayes’ theorem, which starts with a probability of an event and then modifies it by reference to further material, Professor Tribe observed that this carried the risk of embedding the first hard data point in the decision-maker’s mind, which is very hard to shift especially by more individual matters that are peculiar to the individual case.58

Ultimately, although not ruling out its use entirely, Professor Tribe concluded that there are considerable risks in using statistical or probability models to prove what happened in an individual case. Most profound of all is the risk of dehumanising the trial process.59 In that respect, it leads the trier of fact to abandon the subjective assessment of imprecise and potentially conflicting evidence either because of the attraction of figures and certainty, or the inability to properly interrogate the mathematical data.

D. The differences between a trial and a population study

There is a distinction between the use of statistics in population studies, such as those adopted in epidemiology, and in the trial process to determine liability in an individual case. An example from the United Kingdom usefully illustrates this distinction.

Shipman

Harold Shipman, a family doctor in Manchester, was not a stereotypical mass murderer. It is very likely that between 1975 and 1998, Shipman murdered at least 215 of his elderly patients by injecting them with a lethal opiate overdose. Eventually, he made the mistake of forging the will of one of his patients. This led to forensic analysis of his computer, which revealed that he had been changing patient records to make his patients appear sicker than they were. In 2000, Shipman was convicted of 15 counts of murder and one of forging a patient’s will.60

A public inquiry was established to consider the extent of Shipman’s crimes and whether they could have been detected earlier. The inquiry concluded that Shipman had murdered 215 of his patients, and possibly 45 more.61

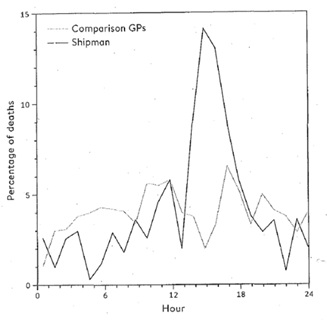

Sir David Spiegelhalter, a British statistician, gave evidence at the public inquiry. Part of the evidence included a graph which plotted the time of death of Shipman’s patients against the time of death of the population of aged care residents in northern England.62 The graph is striking:

Speaking about this graph, Spiegelhalter observed that:63

The pattern does not require subtle analysis: the conclusion is sometimes known as ‘inter-ocular’, since it hits you between the eyes. Shipman’s patients tended overwhelmingly to die in the early afternoon.

The data cannot tell us why they tended to die at that time, but further investigation revealed that he performed his home visits after lunch, when he was generally alone with his elderly patients. He would offer them an injection that he said was to make them more comfortable but which was in fact a lethal dose of diamorphine: after a patient had died peacefully in front of him, he would change their medical record to make it appear as if this was an expected natural death.

It was data from specific cases that established Shipman’s actions, but Spiegelhalter described ‘this type of iterative, exploratory work as “forensic” statistics’ which ‘supported a general understanding of how he went about his crimes.’64 Despite its general tenor, it would no doubt be devastating before a jury, just as other tendency evidence can be extremely powerful.

In response to the Shipman episode, Spiegelhalter was part of a team that developed a version of a Sequential Probability Ratio Test, a statistical monitoring procedure, which could have been used to detect Shipman’s crimes earlier based on the mortality rates of his patients.65 However, a GP monitoring system that was piloted afterwards identified a GP with higher mortality rates than Shipman. Did that mean there was another mass murderer about? Spiegelhalter explains:66

Investigation revealed this doctor practised in a south-coast town with a large number of retirement homes with many old people, and he conscientiously helped many of his patients to remain out of hospital for their death. It would have been completely inappropriate for this GP to receive any publicity for his apparently high rate of signing death certificates. The lesson here is that while statistical systems can detect outlying outcomes, they cannot offer reasons why these might have occurred, so they need careful implementation in order to avoid false accusations.

That example shows the dangers of too readily adopting population data to attribute liability or responsibility in a given case. The difference between population samples and individual cases can also be seen in the way the courts have used epidemiological evidence in the civil context.
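For those who wish to see the mechanics, a sequential probability ratio test of the kind mentioned above can be sketched in a few lines. What follows is a generic textbook version only, and not the procedure Spiegelhalter’s team actually developed: the Poisson model, the function name `sprt_poisson`, the doubling of the expected death rate as the alternative hypothesis, the error rates and the death counts are all assumptions made for illustration.

```python
import math


def sprt_poisson(observed, expected, ratio=2.0, alpha=0.01, beta=0.01):
    """Generic Wald sequential probability ratio test on death counts.

    Hypothetical illustration. Assumes deaths in each period are Poisson:
      H0: mean = expected (the practice is typical)
      H1: mean = ratio * expected (mortality is elevated)
    alpha and beta are the tolerated false-alarm and missed-signal rates.
    """
    upper = math.log((1 - beta) / alpha)   # crossing this favours H1
    lower = math.log(beta / (1 - alpha))   # crossing this favours H0
    llr = 0.0                              # cumulative log-likelihood ratio
    period = 0
    for period, (obs, exp) in enumerate(zip(observed, expected), start=1):
        # Log-likelihood ratio contribution of one period under the Poisson model.
        llr += obs * math.log(ratio) - (ratio - 1) * exp
        if llr >= upper:
            return ("signal", period, llr)
        if llr <= lower:
            return ("no excess", period, llr)
    return ("continue", period, llr)


# Invented figures: three deaths expected per period, far more observed.
print(sprt_poisson([6, 7, 8, 9], [3, 3, 3, 3]))
```

On these invented figures the test signals in the third period. A signal of this kind says only that the counts are hard to reconcile with the assumed baseline. It says nothing about why, which is precisely the point of the south-coast example.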

Evidence of epidemiology

Epidemiology is the study of disease in human populations. Expert evidence of epidemiology can play an important role in tort cases where causation is disputed.

Seltsam v McGuiness concerned whether exposure to asbestos had caused renal cell carcinoma.67 Chief Justice Spigelman accepted that in determining whether exposure to a substance caused disease in a particular case, epidemiological studies can provide circumstantial evidence of possibility which may, alone or in conjunction with other evidence, establish causation on the balance of probabilities.68

The case of Merck Sharp & Dohme (Australia) Pty Ltd v Peterson (‘MSD’)69 concerned the drug known as Vioxx, which for many people provided effective pain relief without gastrointestinal side effects. Peterson was prescribed Vioxx for arthritic back pain. In December 2003, Peterson suffered and survived a serious heart attack. Peterson took Vioxx until it was withdrawn from the market in September 2004.

The main issue at trial in the Federal Court was whether consumption of Vioxx caused or contributed to Peterson’s heart attack. Based on the available data, Justice Jessup found that Peterson’s consumption of Vioxx had materially increased the risk of heart attack.70 But Justice Jessup did not go so far as finding that Peterson’s heart attack would not have occurred but for the taking of Vioxx.71

On appeal, the appellant challenged, among other things, the trial judge’s finding on causation. The Full Federal Court determined that the trial judge’s findings were not sufficient to find causation in Peterson’s favour. In order for Peterson to show that his consumption of Vioxx materially contributed to his heart attack, he needed to show that it was a necessary condition for the occurrence of his heart attack.72 In other words, his heart attack would not have occurred but for his taking Vioxx. The Full Court found that this conclusion was not open on the trial judge’s findings of fact.73

In considering the use of epidemiological evidence in determining causation, the Full Court noted that ‘proof of what may be expected to happen in the usual case is of no value unless it is proved that the particular applicant is indeed “the usual case”.’74 In this case, there were factors which placed Peterson at risk of heart attack independent of his use of Vioxx:75

In this case … there was a clear basis for concluding that Mr Peterson does indeed stand apart from the ordinary case. His personal circumstances were such that they afford a ready explanation for the occurrence of his injury independent of the possible effects of Vioxx. The strength of the epidemiological evidence as a strand in the cable of circumstantial proof is seriously diminished by this consideration. The epidemiological studies do not provide assistance in resolving the question whether it was the risk posed by Vioxx, either alone or in combination with the other candidates, which did eventuate in this case.76

Plainly, the epidemiological data would be sufficient to make decisions about whether the drug was safe or should be made generally available. But the court deals not with the population but with the individual case.

E. Conclusion

May I try to bring some of the threads together.

It would be unfair to reject the utility of mathematical reasoning based solely on the earlier examples I provided.

To the extent that the objection to the use of probability theory in a legal context is one of poor mathematics, as was the case in Collins and Clark, the answer lies in better maths. The extent to which computers will be able to synthesise and order increasingly complex and voluminous data will mean that prosecutors and other parties will not need to rely on simple mathematical formulae. The ability of the computer to identify patterns and reach conclusions as to the probability of past and future events will mean that the output will be increasingly accurate and sophisticated, although it will continue to use assumptions that may be unknowable and untested.

To the extent that the problem lies in juries or judges being befuddled by mathematics they do not understand, the exclusionary rules of evidence go some way towards ameliorating the concern.

The more foundational concern lies in the present role of the judicial process in attributing responsibility based on a state of reasonable satisfaction and actual persuasion.

Chief Justice Gageler has aptly noted that ‘[t]he question for the tribunal of fact is not the abstract question of whether the fact exists but the more concrete question of whether the tribunal is satisfied at the conclusion of the contest that the fact has been proved to the requisite standard.’77 That passage embodies the three critical aspects I identified earlier: the purpose of the task, namely, the determination of concrete individual disputes; the nature of the decision-maker; and the methodology requiring proof of facts to the requisite satisfaction. These are all essential to the rule of law which attaches responsibility for established breaches of the law.78

Although standards of proof in Australian law are couched in terms of probability, the determination of the matter rests in the judge forming a state of actual persuasion bringing to bear the evidence in the context of human experience. This human dimension explains why Australian law rejects a mathematical approach to fact-finding.79

A purpose of the process is to provide a sound basis for the attribution of liability and in turn this requires the transformation of an allegation to a finding. Such a finding is necessary so that there is a rational and accepted basis on which a transgression of the law can be established. To attribute liability based on population data might produce a defensible outcome but it would lack the normative force that the rule of law requires. To assign a statistical value to ‘beyond reasonable doubt’ would be to expressly tolerate a system that wrongly convicts a certain percentage of people.

There remains, of course, an inherent limitation in converting the uncertain to the certain. Many of the limitations are found in human frailty: bias, differing subjective experience and ignorance. Other limits lie in the availability and integrity of the information and data that are brought to bear. Probability theory and rigorous statistical methodology might compensate for some of these flaws but they neither seek nor require certainty. They are comfortable with a range of potential outcomes that allow a range of available responses. But their adoption in substitution for the judicial method risks altering the nature of the process so that it is no longer recognisable as the final and binding determination of legal responsibility by a court.

Findings of fact lead to the making of orders which authorise the executive to act, including in ways which have the most serious impact on individual rights, such as incarceration and eviction from property. Judges are empowered to make orders based on legislation, common law and the inherent jurisdiction of the courts. Juries too have absolute power to determine guilt based on the facts presented to them. Courts are human institutions, and the judgments reached are human, and reflect the experience and values of the community. No one considers that our system is perfect or never results in error, but it is the best system we have. At its core, our legal system is one which affords individualised justice to all. I agree with Professor Tribe that to allow the deliberative process, that is, the reaching of the critical state of satisfaction that the law prescribes, to be performed by mathematics or entrusted to an algorithm risks dehumanising the process.

Thank you for listening.

[1]Australian Academy of Law Constitution, [4].

[2]Oliver Wendell Holmes Jr, ‘The Path of the Law’ (1897) 10 Harvard Law Review 457, 11-12.

[3]See, eg, Dan Priel, ‘Holmes’s “path of the law” as non-analytic jurisprudence’ (2016) 35(1) University of Queensland Law Journal 57.

[4]Charter of Human Rights and Responsibilities Act 2006 (Vic) s 24(1).

[5]Macquarie Dictionary (2025) ‘probability’ (def 2).

[6]The Royal Society and the Royal Society of Edinburgh, The use of statistics in legal proceedings: a primer for courts (November 2020) 10.

[7]Macquarie Dictionary (2025) ‘probability’ (def 4).

[8]D H Hodgson, ‘The Scales of Justice: Probability and Proof in Legal Fact-finding’ (1995) 69 Australian Law Journal 731, 733.

[9]See, eg, Commonwealth v Amann Aviation Pty Ltd (1991) 174 CLR 64, 123 (Deane J); [1991] HCA 54.

[10](1938) 60 CLR 336, 361-2; [1938] HCA 34.

[11](1990) 169 CLR 638, 642-3 (Deane, Gaudron and McHugh JJ); [1990] HCA 20.

[12]Green v The Queen (1971) 126 CLR 28, 32-3; [1971] HCA 55.

[13]Evidence Act 2008 (Vic) s 56.

[14]Ibid s 55.

[15]Ibid s 3.

[16]Pell v The Queen (2020) 268 CLR 123; [2020] HCA 12. See also Cavanaugh (a pseudonym) v The Queen [2021] VSCA 347, [212]-[217] (Walker JA).

[17]Malec (1990) 169 CLR 638, 643 (Deane, Gaudron and McHugh JJ); [1990] HCA 20.

[18]People v Collins 68 Cal 2d 319 (Cal, 1968).

[19]Ibid 325.

[20]R v Clark [2003] EWCA Crim 1020, [96] (‘Clark’).

[21]Ibid [99].

[22]Ibid.

[23](2018) 55 VR 307; [2018] VSCA 80.

[24]Ibid 311 [22].

[25]Ibid.

[26]Collins 68 Cal 2d 319 (Cal, 1968), 327.

[27]Ibid.

[28]Ibid 328-9.

[29]Ibid 329-31.

[30]Ibid 320.

[31]The RSS, ‘Royal Statistical Society concerned by issues raised in Sally Clark case’ (News Release, 23 October 2001).

[32]Ibid.

[33]Ibid.

[34]Ibid.

[35]The Royal Society and the Royal Society of Edinburgh (n 6) 22.

[36]R v Clark [2000] 10 WLUK 1.

[37]Letter from the President of the RSS to the Lord Chancellor, 23 January 2002.

[38]Clark [2003] EWCA Crim 1020. After Clark’s appeal was dismissed, medical evidence was discovered which suggested that one of her sons may have died from natural causes, casting doubt on both convictions. The new evidence was referred to the Criminal Cases Review Commission, which referred the case to the Court of Appeal. The Court noted at [180] that if the statistical evidence had been fully argued it ‘would, in all probability, have considered that the statistical evidence provided a quite distinct basis upon which the appeal had to be allowed.’

[39]Ward (2018) 55 VR 307, 327 [93]; [2018] VSCA 80.

[40]Ibid 327 [92].

[41]Jeremy Gans and Gregor Urbas, ‘DNA identification in the criminal justice system’ (Australian Institute of Criminology, Trends & issues in crime and criminal justice, No. 226, May 2002) 4-5.

[42]Evidence Act 2008 (Vic) s 76.

[43]Ibid s 79.

[44]Michael O Finkelstein and William B Fairley, ‘A Bayesian Approach to Identification Evidence’ (1970) 83(3) Harvard Law Review 489.

[45]David Spiegelhalter, The Art of Statistics: Learning from Data (Pelican Books, 2019) 307.

[46]The Royal Society and the Royal Society of Edinburgh (n 6) 21.

[47]Spiegelhalter (n 45) 315; The Royal Society and the Royal Society of Edinburgh (n 6) 17.

[48]Laurence H Tribe, ‘Trial by Mathematics: Precision and Ritual in the Legal Process’ (1971) 84(6) Harvard Law Review 1329.

[49]Ibid.

[50]Ibid 1334.

[51]Ibid 1338-9.

[52]Ibid 1344-50.

[53]Ibid 1344-60.

[54]Ibid 1346-9.

[55]Ibid 1346.

[56]Ibid 1349-50.

[57]Ibid.

[58]Ibid 1360-5.

[59]Ibid 1375-6.

[60]Spiegelhalter (n 45) 1-2.

[61]Dame Janet Smith, The Shipman Inquiry, First Report, Volume 1: Death Disguised (July 2002), [15], [22].

[62]Spiegelhalter (n 45) 5.

[63]Ibid 4-6.

[64]Ibid 6.

[65]Ibid 292.

[66]Ibid 294.

[67]Seltsam v McGuiness (2000) 49 NSWLR 262; [2000] NSWCA 29.

[68]Ibid 274 [78]-[79]; 276 [89]; 278 [98]; [102].

[69](2011) 196 FCR 145; [2011] FCAFC 128.

[70]Peterson v Merck Sharpe & Dohme (Australia) Pty Ltd (2010) 184 FCR 1, 200 [476], 233-4 [568]–[570], 234-5 [572]; [2010] FCA 180.

[71]Ibid 299 [772].

[72]MSD (2011) 196 FCR 145, 171-2 [104]; [2011] FCAFC 128.

[73]Ibid 172 [105].

[74]Ibid 172 [106].

[75]Ibid 163-4 [78]-[79]; 165-6 [86]; 177 [120].

[76]Ibid 174 [113].

[77]S Gageler, ‘Alternative facts in the courts’ (2019) 93 Australian Law Journal 585, 589.

[78]Ibid 590.

[79]See, eg, R v GK (2001) 53 NSWLR 317, 323 [26] (Mason P); [2001] NSWCCA 413; R v Galli (2001) 127 A Crim R 493, 505 [55] (Spigelman CJ); [2001] NSWCCA 504.